CHAPTER 14

THE FUTURE OF

This chapter will try to predict what chip and system design will look like five years from now and how prototyping will be impacted, and vice versa. We will again consider prototyping in its many forms and FPGA-based prototyping within that framework.

14.1. If prediction were easy…

… then flip-flops wouldn’t need set-up time (pardon our little engineering joke).

As they say, predictions are difficult, especially about the future. However, in the case of prototyping, the general direction for the next few years can be predicted pretty well. We can look back at chapter 1 and recall the industry trends for semiconductor design and our 12 prototyping selection criteria. We can then use these insights to predict how our need for prototyping might evolve in the coming years. Of course, different SoC designs in various application areas have different needs, so we shall start by examining the specific needs of three important application areas.

14.2. Application specificity

When looking into the crystal ball it becomes clear that chip development needs are highly application-specific. The application areas targeted by a design will, to some extent, govern its development methods and timescales. However, the common denominator will always be the software, which increasingly determines system functionality and changes the very way that hardware is designed in order to efficiently run that software.

Today, in late 2010, we have already reached a point at which the application domains significantly determine chip design requirements, most importantly on the path from idea to implementable RTL combined with software, and subsequently its verification. Given that different application domains use different IP and interconnect fabrics and have different software development requirements, design flows are increasingly becoming tailored to particular application domains.

Let’s compare the trends in three typical domains, specifically wireless/consumer, networking and automotive.

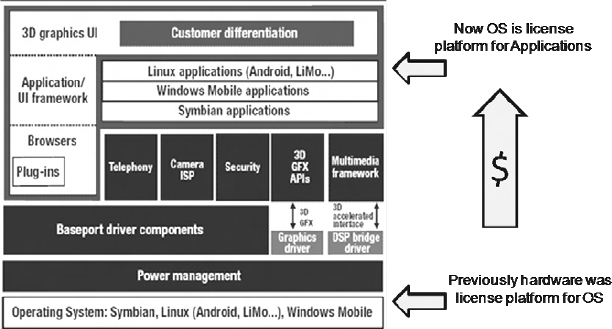

14.3. Prototyping future: mobile wireless and consumer

The user experience in wireless and consumer applications is today already mainly determined by applications running in higher-level, hardware-independent development platforms like Java™ and specific SDKs. Figure 165 shows the trend from proprietary operating systems towards open operating systems, which encourage application developments mostly from third-party developers. Since the introduction of online application stores, some consumer hardware has become the delivery vehicle for distribution of applications, which are controlled by the operating system that enables them. Application developers are offered a distribution vehicle and in exchange they pay a percentage back to the environment’s proprietor. The result is that, in a unique way, the OS providers have found a vehicle to monetize the end applications rather than hardware platforms.

Figure 165: The shift from proprietary operating systems to application driven design

In the past, the operating systems themselves used to be quite profitable licensing businesses. Then, in 2008, there were some sudden changes: handset operating systems were acquired by major vendors while, conversely, we saw the release of the Android operating system. Several handset providers also released their own proprietary operating system platforms. At the time of writing in 2010, Microsoft® Windows Mobile™ has become the only widely-used operating system that still charges license fees to mobile handset manufacturers.

Instead of making the OS a “licensable product,” the new model is that the handsets and the embedded OSs running on them have now become a channel to provide applications of astonishing depth and variety. We estimate that users have a choice of more than 500,000 different applications across the various operating systems and platforms, accessible through various online application stores. For mobile applications this defines a fundamental shift in where the business value lies and it is unlikely to be reversed.

What does all this mean for chip design and prototyping? The effect on development is that because the value is moving into applications, software will increase even further in importance and its development needs will take precedence. In effect, the software will increasingly govern how the hardware is designed. In the case of mobile wireless and consumer applications this means that hardware developers need to provide fairly generic execution engines as early as possible and independently of the actual hardware. For our 12 prototyping selection criteria, this means that replication cost and time of availability will be of highest importance. The sheer number of application developers will require a very inexpensive way to develop applications, which will push more capabilities into SDKs. Time of availability will be important and in a sense hardware and software development will increasingly be done upside down, with the software being available before the hardware and the hardware designed to execute OS-based software in the most efficient way.

While the trend to isolate software development from hardware effects using hardware abstraction layers and OSs will strengthen even further, user expectations for high quality applications will grow and as such application verification will also gain importance.

Future SDKs will have to provide some of the application verification capabilities which already exist today for other software development environments like virtual platforms and host development environments. For example, software memory checking is a well-known technique in the host workstation space and quality verification tools such as Valgrind, Purify, BoundsChecker, Insure++, or GlowCode are part of any industry-strength software design flow. However, these types of tools are not generally available or widely used in the embedded world. SDKs and virtual platforms are the appropriate prototyping areas to which these capabilities should be added.

There will still be a need for FPGA-based prototyping for the lowest levels of the software stack where speed and accuracy are needed at the same time. In addition, the needs of the hardware platform do not become any more relaxed. For example, the leading platforms will be low power and high capacity while providing highest quality multimedia and versatile interfaces, all in a reliable, low-cost and small format package. This means very advanced SoC designs and many overlapping projects in order to introduce new models at the rate that market leadership demands. Relentless and accelerated SoC project development demands reuse of FPGA-based prototyping methodology. We simply will not have time to re-invent wheels and methods or create large-scale prototyping hardware for every design. An in-house standard platform strategy and Design-for-Prototyping methodology will be required to keep all those software-dominated projects on schedule.

14.4. Prototyping future: networking

In contrast to the mobile wireless and consumer application spaces, networking is not determined by end-user applications but completely driven by data rates and per-packet processing requirements. Nevertheless, given the need for product flexibility and configuration options, software is once again used to determine processing functionality and the hardware is increasingly designed to prioritize the efficient execution of the software.

As already outlined in chapter 1, the networking application domain is moving towards architectures using multiple CPU cores and underlying flexible interconnect fabrics. According to the ITRS predictions shown in chapter 1, the die area will remain constant while the number of processors per design will grow by 1.4x every year, on average. This will have a profound impact on the future of prototyping given that the application partitioning between the processors has to be properly tested and verified prior to silicon production.
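To get a feel for what 1.4x per year means over the five-year horizon of this chapter, the growth can be compounded in a few lines of Python. This is only a sketch: the four-core starting point is a hypothetical example and not an ITRS figure; only the 1.4x annual factor comes from the text above.

```python
# Annual growth factor for processors per design, as quoted from ITRS above.
GROWTH_PER_YEAR = 1.4


def projected_cores(start_cores, years, growth=GROWTH_PER_YEAR):
    """Return the projected processor count after `years` of compound growth."""
    return start_cores * growth ** years


# Hypothetical starting point: a design with 4 processors today.
for year in range(6):
    print(f"year {year}: about {projected_cores(4, year):.1f} processors")
```

Compounded over five years the factor is 1.4 to the fifth power, or roughly 5.4x, so partitioning and verification would have to cope with several times as many processors on the same die area.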

Considering multimedia applications briefly, a fair amount of the tasks to be distributed between processors can be pre-scheduled. Proper execution can be verified using virtual prototypes as they allow very efficient control of execution as well as the necessary debug visibility into hardware and software co-dependencies. For a networking application, however, incoming traffic is distributed to packet processors and on-chip accelerators. Given the inherently parallel nature of packet processing, the choice of compute resources is made at runtime, which means that less pre-scheduling is required. Nevertheless, the various options for runtime scheduling will need to be prototyped prior to committing to silicon, at as realistic a speed as possible. FPGA-based prototypes and virtual prototypes will be enhanced to collect appropriate performance and debug data to optimize scheduling algorithms.

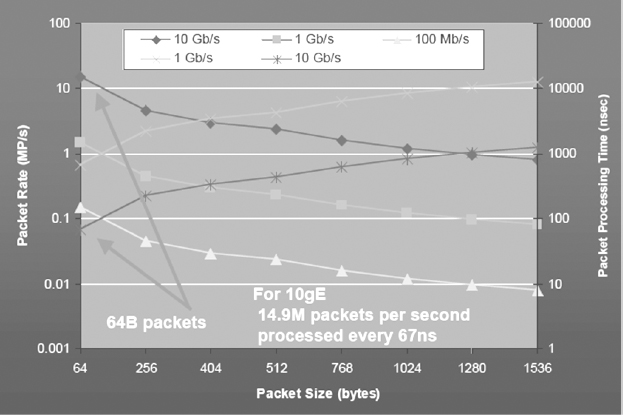

Figure 166 illustrates how packet rates increase with transfer rates and how packet-processing times become correspondingly shorter.

Figure 166: Packet rates and processing times (Source: AMCC)

As an example, for 10Gb Ethernet up to 14.9 million packets have to be processed per second, which leaves roughly 67 nanoseconds per packet. To allow any kind of prototyping, both capacity and execution speed of prototypes will have to improve. For virtual prototypes it is likely that this can only be achieved using parallel, distributed simulation. FPGA prototypes will have to overcome capacity limitations using improved scheduling and partitioning algorithms as well as intelligent stacking of prototypes themselves.
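The 10Gb Ethernet figures can be reproduced with a short calculation. The sketch below assumes the worst case of minimum-size 64-byte frames, each accompanied on the wire by an 8-byte preamble and a 12-byte inter-frame gap; the text does not state which frame size the figures refer to, so treat that as an assumption. Under it, the line carries about 14.9 million packets per second, or one packet roughly every 67 ns.

```python
# Per-frame overhead on the wire for standard Ethernet.
PREAMBLE_BYTES = 8          # preamble plus start-of-frame delimiter
INTERFRAME_GAP_BYTES = 12   # minimum idle time between frames
MIN_FRAME_BYTES = 64        # smallest legal Ethernet frame


def packets_per_second(line_rate_bps, frame_bytes=MIN_FRAME_BYTES):
    """Maximum packet rate for back-to-back frames of the given size."""
    bits_on_wire = (frame_bytes + PREAMBLE_BYTES + INTERFRAME_GAP_BYTES) * 8
    return line_rate_bps / bits_on_wire


def time_per_packet_ns(line_rate_bps, frame_bytes=MIN_FRAME_BYTES):
    """Processing budget per packet, in nanoseconds, at full line rate."""
    return 1e9 / packets_per_second(line_rate_bps, frame_bytes)


for rate_gbps in (1, 10):
    rate_bps = rate_gbps * 1e9
    print(f"{rate_gbps} Gb/s: {packets_per_second(rate_bps) / 1e6:.2f} Mpps, "
          f"{time_per_packet_ns(rate_bps):.1f} ns per packet")
```

A prototype running well below line rate therefore sees a proportionally larger per-packet budget, which is worth keeping in mind when interpreting performance data collected from it.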

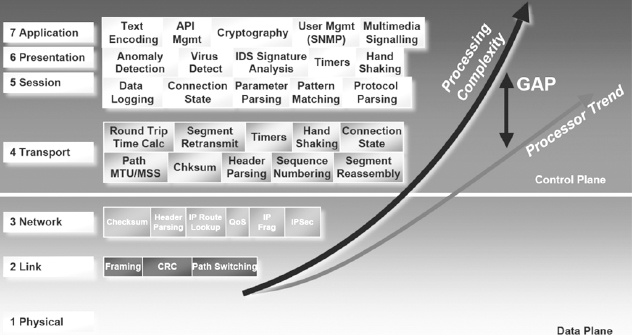

Figure 167 illustrates how the different functions performed in the networking control and data plane have evolved over time as processors have become more capable. It also shows that the complexity of processing demands has been outpacing the development of new processors. Developers have therefore moved to multiple processor cores and the most complex challenge has become to efficiently partition tasks across them.

In the control plane, the “in-band control traffic” is handled with the actual connection processing for routing/session establishment of the network protocol in use.

Figure 167: Functionality distribution in Data and Control Plane (Source: AMCC)

Taking into account the underlying multi-processing nature of the hardware, task distribution is important. Each “task” within the control processing is complex and offers limited internal parallelism. The tasks themselves are fairly independent and can be assigned individually to dedicated CPUs.

Unfortunately, network applications have processing loads that come in bursts, which leads to CPUs that are powerful enough to handle the peak load, but otherwise underused. As a result, these very capable processors are not an optimally efficient use of silicon. Given the dependency of the processing requirements on the actual networking traffic it is difficult, if not impossible, to assign tasks at compile time to different processors.

The data plane functionality is focused on forwarding packets and translates information from control traffic into device-specific data structures. The data plane’s code is packet-processing code and easy to run on multiple cores operating in parallel. This code can be more easily partitioned across multiple cores, because packets can be processed in parallel as multiple cores run identical instances of the packet-processing datapath code.

Again, much like for control code, the actual assignment of processing units to tasks is highly dependent on the actual network traffic and should be done at runtime. Assignment of tasks to processors at compile time is, again, difficult if not impossible.
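The difference between compile-time and runtime assignment can be illustrated with a deliberately simplified sketch. It assumes unit-cost packets, a hypothetical four-core device and a burst in which a single flow dominates the traffic. None of these numbers come from a real system, and the model ignores the packet-ordering constraints that real designs must respect.

```python
import heapq


def static_makespan(costs, flow_ids, n_cores):
    """Compile-time style: every flow is pinned to one fixed core."""
    load = [0.0] * n_cores
    for cost, flow in zip(costs, flow_ids):
        load[flow % n_cores] += cost
    return max(load)


def runtime_makespan(costs, n_cores):
    """Runtime style: each packet goes to the currently least-loaded core."""
    loads = [0.0] * n_cores          # a list of equal values is a valid heap
    for cost in costs:
        least = heapq.heappop(loads)
        heapq.heappush(loads, least + cost)
    return max(loads)


# Hypothetical burst: flow 0 sends 90 packets, flows 1 to 3 share the other 10.
flow_ids = [0] * 90 + [1, 2, 3] * 3 + [1]
costs = [1.0] * len(flow_ids)

print("static assignment :", static_makespan(costs, flow_ids, 4))
print("runtime assignment:", runtime_makespan(costs, 4))
```

In this toy case the pinned assignment leaves one core with almost all the work, whereas runtime dispatch spreads the burst evenly. A prototype running realistic traffic is what would show whether a given scheduling policy behaves this well in practice.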

We predict that prototyping of networking systems will largely continue to be done in a hierarchical fashion. The individual processing units will continue to be tested against their packet-processing requirements but, given the increased complexity of those requirements, it will become too risky to commit to hardware without proper prototyping.

As discussed earlier, in contrast to mobile wireless and consumer applications the assignment of processing tasks to processing units is not done at compile time, nor is the software separated from the hardware as a set of user applications running on operating systems. The type of software used in networking applications is much more “bare metal” and tightly coupled to the processors upon which it runs. Hardware dependency in software is difficult to model without the hardware being present in some form. Hence software debug poses different challenges: it has to be done at runtime and it also drives requirements for redundancy, all of which will make prototyping of all types even more compelling than it already is today.

14.5. Prototyping future: automotive

Automotive is a very dynamic application area and particularly interesting in that it has complexity trends increasing in two different directions.

First, the overall complexity of a car as a combined device already well exceeds that of wireless and consumer devices. Modern mid-range cars have at least 30 electrical/electronic systems with up to 100 microprocessors and well above 100 sensors. These processors will be combined into a network of so-called electronic control units (ECUs). With increased trends towards security and safety, as well as more and more video processing to assist driving, the number of ECUs and network complexity will grow much further and at an even faster pace.

Second, in automotive applications the complexity grows beyond just electronics, meaning the combination of electrical hardware and software. Automotive systems will also have to be developed which take into account mechanical effects and their interaction with electronics, so-called “mechatronics.” Clearly, any software in such a mechatronic system is very hardware-dependent indeed, once again increasing the need for prototyping.

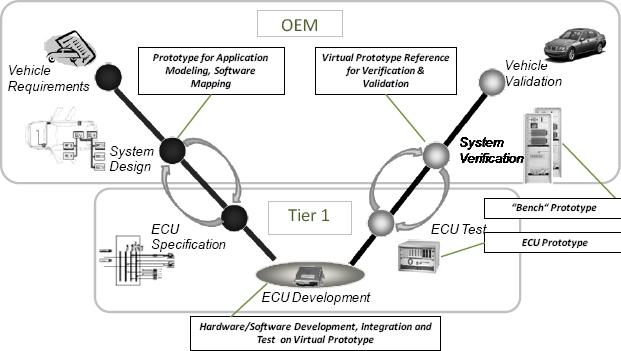

As in many other application areas, software plays a crucial role so that hardware is increasingly developed with the aim of optimizing the software’s efficiency. Figure 168 shows the V-Cycle often used to represent the automotive design flow as a design starts from the left and progresses to the right.

The V-cycle diagram is a simple way to illustrate the interaction between the original equipment manufacturers (OEMs) who eventually produce the complete vehicle and the so-called tier 1 suppliers who design and deliver the ECUs for the various vehicle sub-systems. We can see on the left that a vehicle requirement is developed and is modeled at the system level until ECU-level specifications can be produced. Then the ECU supplier develops their product based on the specification and delivers units for test in the overall vehicle prototype for validation alongside all the other ECUs being developed for the new vehicle.

Figure 168: The automotive V-Cycle and its relation to prototyping

Obviously, communication and excellent project management are required between these two groups, and in the diagram we can see two transitions at which communication between the various parties involved is crucial. However, growing complexity of electronic vehicle content is stressing traditional methods and it will be increasingly valuable for the communication between OEM and supplier to be more sophisticated in order to reinforce and illustrate the specification and deliverables.

Replacing paper specifications with executable virtual prototypes and FPGA-based prototypes will become mandatory to increase the quality of communication and avoid costly turnarounds due to defects found too late in the design flow.

For example, if the specifications from OEMs to their ECU suppliers are imprecise, then bugs will be found only during the integration phase, illustrated on the right side of the diagram, and the development will have to go all the way back to the drawing board if the defects cannot be corrected.

In addition, ECU developers need to be able to validate design trade-offs and efficiently interact with their suppliers and other ECU developers. Late changes in the specification can cause significant cost for correcting issues. The safety-critical nature of some of these vehicle functions is also demanding ever-more rigid and provable design and verification methodology, for software as well as hardware.

Figure 168 also illustrates the various stages at which prototyping would complement written or verbal communication with executable models of the systems, sub-systems and chips under development:

- Before specifications are distributed to tier 1 suppliers, OEMs want to model the software applications and their effect on the system. Applications have to be modeled at high enough abstractions so that they can be mapped into early prototypes of hardware to be developed and effects like bus utilization, ECU and CPU utilization can be analyzed.

- ECUs as sub-systems in themselves need to be prototyped to improve software development schedules by starting software development prior to hardware availability. The resulting prototypes (virtual, FPGA-based or using first silicon) can be used for hardware verification and system validation itself.

- In the software world, once sub-systems are available and integrated, they are used for software testing as well. Hardware prototypes of the ECUs and sub-systems of ECUs are made available in complex test racks which are used for software development and testing.

Due to their networked nature, automotive systems are at least one order of magnitude more complex than, for example, consumer applications. Distributed simulation of virtual prototypes as well as increased capacity and scalability for FPGA prototypes will be key requirements to address the resulting capacity and speed issues. With the further increasing complexity and pressure on schedule timelines, more and more of these prototypes will become virtualized; already, hardware-in-the-loop systems (HILS) are being used in conjunction with software simulation.

We can envisage completely virtualized systems in widespread use for high-level software validation, complementing FPGA-based or silicon-based prototypes which are located in main sites in smaller numbers, used for lower-level software validation.

Capacity, speed and early availability will be important selection criteria for the various prototyping options. Cost requirements will also drive further use of virtual prototypes for software development given that they have a lower cost point per seat than hardware-based prototypes, making them available in larger numbers.

In addition, safety requirements will change software development processes and make prototyping mandatory. Greater software content in vehicles means that software validation becomes very widespread, beyond pure development into areas of certification and safety compliance. For instance, let’s consider the development of the sub-system which angles the rear wheels depending on the vehicle speed around a curve. In the past it was based on pure mechanics and hydraulics, and the safety of those components was well understood and could be verified by the appropriate testing authorities. Any attempt to replace such a system with software on an ECU would require a great deal of software validation tests to be passed in order to achieve safety certification against emerging standards such as those for software verification (MISRA) and predictable software development (ISO 26262). In such a validation-rich design environment as automotive, prototyping becomes a key factor as a means of early verification in repeatable design flows and an enabler for safety-critical software development.

14.6. Summary: software-driven hardware development

As outlined in the previous sections, the needs are different in the various application domains, but the overarching commonality is the trend towards more software development. In the future the actual system behavior in the majority of application domains will be mostly defined by software; that is certainly the case for those we explored in this chapter. As a result, hardware development will be driven to optimize the software’s execution.

Depending on the specifics of the application domains this has different effects. In mobile wireless and consumer, the underlying digital hardware is fairly generic, enabling software platforms like Google Android™, Apple® Mobile OS or Windows Mobile™ 7 to execute most efficiently and decouple the ecosystem of application developers from the actual hardware.

In contrast, in the networking application domain, the software still defines system behavior but it is much more hardware-dependent, embedded in the networking software stacks on Linux and other operating systems. However, software is still the key differentiator of a combined hardware/software platform.

Similarly, in automotive, due to the overall complexity, developers of hardware-dependent software often reside in independent companies. They need access to representations of the hardware for which they are developing software and this increases the need for virtual and FPGA-based prototypes.

There is no question that software will gain even more importance and that we are on the cusp of quite significant changes in development methodologies as well as responsibilities within the design chains. How exactly the changes will manifest themselves very much depends on the application domains. It is safe to assume that in all of them prototyping will play a key role.

14.7. Future semiconductor trends

Having considered the future from the viewpoint of certain key application areas, let’s cross-reference those predictions by taking a look at the trends in semiconductor development and production.

In chapter 1 we outlined the various semiconductor trends that have impacted SoC and other chip design up until now. We saw how these trends have increased the need for prototyping up until the present time. In this section we will complete that task and try to predict how those trends will continue over the next five years and what effect this will have on prototyping.

- Miniaturization: there is no clear end in sight to the miniaturization of silicon towards smaller technology nodes. As a result, projects at the leading-edge technology nodes will be so complex that it is simply too risky to tape out without prototyping early and often. FPGA-based prototyping will support increased complexity as FPGA devices get larger, benefiting from, and to some extent driving, those technology trends.

- Embedded CPUs: as the latest generations of FPGAs have family members which include embedded processors, it will be interesting to see if they will be used to run the embedded software in the system, rather than use a test chip of the CPU core(s) which the FPGA is prototyping. Perhaps the choice of CPU in the SoC might even be driven by its availability or otherwise in an FPGA format for prototyping.

- Decrease in overall design starts: this trend is widely expected to continue as SoC production costs will make smaller technology nodes less accessible and will likely cause further consolidation in the semiconductor industry. With fewer design starts, each remaining design needs to address more end applications in order to recoup the investment. Virtual and FPGA-based prototyping will become even more necessary in order to mitigate the risk of potential re-spins.

- Programmability: in the mobile wireless and consumer application domains the desire to de-couple software development from hardware dependencies will further increase. Virtual prototyping will also gain in importance as it allows software development to commence even earlier. SDKs will contain greater capabilities for software verification previously only accessible to host development.

- IP reuse: IP usage continues to increase, which is an easy prediction to make. The semiconductor analyst Gartner confirmed in its most recent predictions that the amount of IP reuse will again double between 2010 and 2014. In addition, the trend towards licensing complete sub-systems will grow and open a new area of prototyping for complete sub-systems containing an assembly of pre-defined hardware and software IP.

- Multicore processing: Adoption of multicore architectures will cause more pressure on analyzing and optimizing software parallelization. Today parallelization has been solved in specific application domains, such as graphics, but it is likely that different application-specific solutions will be required in other areas, such as networking and automotive electronics.

- Low power: today’s methods for reducing power in semiconductors are focused on implementation and silicon-level engineering. Future requirements will be better addressed by moving the focus of low-power design to the architectural design level. As a result, virtual and FPGA-based prototypes will be instrumented to allow low-power analysis for early feedback on some aspects of the power design, for example activity capture and average dissipation over certain software functions.

- AMS design: an increase in the analog/mixed signal portion of chips will create even more demand to allow in-system validation. Virtual IO for virtual platforms and interfaces of FPGA-based prototypes to their environment will become more critical.
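As a rough illustration of the early power feedback mentioned under the low-power trend, toggle counts captured while particular software functions run could be turned into an average dynamic-power estimate using the standard switching-power relation (each transition dissipating half of C times Vdd squared). This is a sketch only: the function names, toggle counts, capacitance, voltage and clock frequency below are all hypothetical, and a real flow would need technology-specific data.

```python
def average_dynamic_power_w(toggles, cycles, clock_hz, c_node_farads, vdd_volts):
    """Estimate average switching power from captured activity.

    Assumes every counted transition dissipates 0.5 * C * Vdd^2, where C is an
    effective switched capacitance per transition (a hypothetical value here).
    """
    transitions_per_second = toggles * clock_hz / cycles
    return 0.5 * c_node_farads * vdd_volts ** 2 * transitions_per_second


# Hypothetical activity captured per software function: (toggles, cycles).
captured = {
    "video_decode": (2_000_000, 1_000_000),
    "idle_loop": (50_000, 1_000_000),
}

for name, (toggles, cycles) in captured.items():
    power = average_dynamic_power_w(
        toggles, cycles, clock_hz=100e6, c_node_farads=1e-12, vdd_volts=1.0
    )
    print(f"{name}: {power * 1e3:.4f} mW")
```

The absolute numbers mean little without calibration, but the relative dissipation of different software functions is the kind of architectural feedback that becomes available long before silicon.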

14.8. The FPGA’s future as a prototyping platform

As well as the previously mentioned trends which demand greater prototype adoption, there are trends in device and tool capability which allow us to keep up with that demand. FPGA devices are a classic illustration of Moore’s Law, as we mentioned before. New research will allow the use of multi-die packages and 3D technology to greatly increase the capacity of our leading-edge FPGA devices beyond Moore’s Law. However, this leap in capacity brings new challenges in connectivity. The ratio between internal resource and external IO will continue to cause visibility and connectivity issues for prototyping, accelerating novel solutions for multiplexing and debug tools.
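The pressure that the resource-to-IO ratio puts on multiplexing can be sketched with simple arithmetic. The signal count, pin count and transfer clock below are hypothetical, and the speed figure is only an upper bound that ignores synchronization and board-delay overhead.

```python
import math


def mux_ratio(signals_crossing, pins_available):
    """How many design signals must share each physical inter-FPGA pin."""
    return math.ceil(signals_crossing / pins_available)


def max_system_clock_hz(transfer_clock_hz, ratio):
    """Upper bound on the design clock: `ratio` transfers must fit in one cycle."""
    return transfer_clock_hz / ratio


# Hypothetical partition: 4000 signals cross between two FPGAs over 500 pins.
ratio = mux_ratio(4000, 500)
limit = max_system_clock_hz(400e6, ratio)
print(f"multiplexing ratio {ratio}:1, design clock at most {limit / 1e6:.0f} MHz")
```

As device capacity grows faster than pin count, the number of signals crossing each partition boundary tends to rise, so the ratio increases and the achievable prototype speed falls unless multiplexing techniques improve.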

The complexity of creating reliable and flexible FPGA boards using these new devices in ever-shorter project cycles will reduce the proportion of in-house boards compared to commercial boards. In-house boards will still be used for specific needs or to support very large numbers of platforms, or to support a large company in-house standard. However, we shall see most other designs complete their current migration to ready-made boards, which itself will probably create a healthy and competitive market from which prototypers can select.

The future of FPGAs as the hardware prototyping platform of choice is secure and will be complemented with growing use of virtual prototypes. These will increasingly be combined into hybrid arrangements and we can expect a merging of the two approaches into a more continuous prototyping methodology from system to silicon in the future.

14.9. Summary

Given the analysis of the previous sections in this chapter, prototyping will become even more of a key element for future design flows. Interesting times are in store for providers of prototypes at all levels. Eventually the hardware and software development worlds will grow closer together, with prototypes (virtual, FPGA-based and hybrid) being the binding element between both disciplines.

The authors gratefully acknowledge significant contributions to this chapter from:

Frank Schirrmeister of Synopsys, Mountain View